In this issue:

- Antithesis: Debugging Debugging—Brian Kernighan once said that "Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it." But computers are a good source of systematized cleverness, and Antithesis, a startup just now emerging from stealth, is building better tools for squashing bugs.

- Rebooting—Cruise has hired a new head of safety, a signal inside and outside the company.

- AI, UGC, and Communities—Businesses that have data sometimes find that their legal ownership of it doesn't line up with the expectations of the people who provided it.

- Land Rushes—The cost of landing new SaaS deals is finally declining.

- AI and Share Shift—Microsoft wanted to take market share from Google but took some from Amazon instead.

- Nirvana—Heavily-shorted stocks are finally doing what they're supposed to do: dropping.

Antithesis: Debugging Debugging

Programming is fun and lucrative, but it's also a bit of a misnomer: over time, "programming" becomes less a process of writing code that makes a computer do something, and more of a process of fixing code because the computer does the wrong thing, writing code to add feature X without breaking feature Y, or even broader organization-level debugging—"Why did we spend the last two weeks working on something that got canceled three weeks ago?"

Some of this is the inevitable result of computers faithfully executing the directives of intrinsically flawed human beings. But some of it is probably because software engineering, as a discipline, really hasn't had a chance to slow down, catch its breath, and figure out what it's doing. That’s not the case elsewhere: when a plane has a bug, it's in the news cycle for weeks, partly because the potential consequences are so dire but also because such failures are so rare; plenty of other things with similar risk to life never become front-page news. (Total recorded air travel deaths since 1970 sum to under 84,000, with a steep decline since the 1980s. That cumulative total is less than the number of road accident fatalities worldwide in a typical month.) It's news because the aircraft industry takes safety and correctness seriously. But that comparison should tell you something else: air travel deaths in 2023 were down about 90% from the 1970s, while global passenger count is up ~9x. So the industry has gotten, roughly speaking, 100x safer per passenger.
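Spelled out with the paragraph's own round numbers (2023 deaths at about 10% of the 1970s level, passengers up about 9x), the per-passenger improvement is a ratio of ratios:

$$
\frac{\text{deaths}_{2023} / \text{passengers}_{2023}}{\text{deaths}_{1970\text{s}} / \text{passengers}_{1970\text{s}}}
\approx \frac{0.1}{9} \approx \frac{1}{90}
$$

That is roughly a 90x improvement, which is where "roughly speaking, 100x" comes from.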

It's hard to find good data on this, but I would bet that over the last few decades, the number of bugs per line of code has not in fact dropped by two orders of magnitude. This would be tricky to measure. "Lines of code" is a convenient metric because some variant of wc -l is a good quick proxy for how complicated a project was. It will, at least, distinguish between "I wrote a script to regularly copy my downloads to Dropbox" and "I wrote a new operating system." But what you'd really want to look at is something closer to bugs divided by the number of nodes in the abstract syntax tree. And even deeper than that, you need some measure of the uniqueness of operations, and of the difficulty of bugs. It's not enough to count up the number of bugs: you also want some sense of how damaging they are (does the program emit a message on a console but keep on working, or does some physical machine controlled by the program break in a catastrophic way?). And you need some measure of the cost of correcting them: a compiler might helpfully inform you that you dropped a semicolon, but your compiler will probably not say "This code's documentation exists entirely in the head of Gary, the coder who wrote it, who quit six months before you joined the company and does not remember what this program does or why it's breaking." Even worse, if the bug is the intersection of a software problem and a hardware issue, reproducing it means recreating some unknown state of the physical machines and their connections, not just the software; the mere existence of such bugs is a measure of how hard they are to fix.
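To make the lines-versus-AST-nodes distinction concrete, here is a minimal sketch that uses Python's standard `ast` module as a stand-in for "the abstract syntax tree" (the text doesn't specify a language or tool). `size_metrics` and `bug_density` are hypothetical helpers, and any bug count you feed them is an assumption; the point is only that two one-line programs can differ a lot in AST size.

```python
import ast


def size_metrics(source: str) -> dict[str, int]:
    """Return two size measures for a Python snippet: non-blank lines and AST nodes."""
    lines = sum(1 for line in source.splitlines() if line.strip())
    # ast.walk yields every node in the tree, including operator and Load/Store context nodes.
    nodes = sum(1 for _ in ast.walk(ast.parse(source)))
    return {"lines": lines, "ast_nodes": nodes}


def bug_density(bug_count: int, source: str) -> dict[str, float]:
    """Normalize a (hypothetical) bug count by each size measure."""
    m = size_metrics(source)
    return {
        "bugs_per_line": bug_count / m["lines"],
        "bugs_per_ast_node": bug_count / m["ast_nodes"],
    }


simple = "x = 1"
dense = "x = (a + b) * (c - d) / max(e, f) if g else h[i:j]"
print(size_metrics(simple))
print(size_metrics(dense))
```

Both snippets count as one line, but the second has many times as many nodes, so a per-node denominator credits it with more surface area for bugs. It still says nothing about how damaging or how expensive to fix any given bug is, which is the harder part of the measurement.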

So solving bugs means coming up with a theory of how programs work in principle, how they work in practice (i.e. once they're running on physical machines with drives that can fill up, processors that can overheat, networks that can drop packets, etc.), and how the practice of programming works: what is going through someone's head at t=0 that will cause some catastrophic bug to be revealed at t=a very inconvenient time, such as unusually high usage stemming from a successful product launch. Antithesis has been building such a system. Which works, incidentally: instead of client logos, their homepage links to issue trackers and pull requests highlighting bugs they've found.

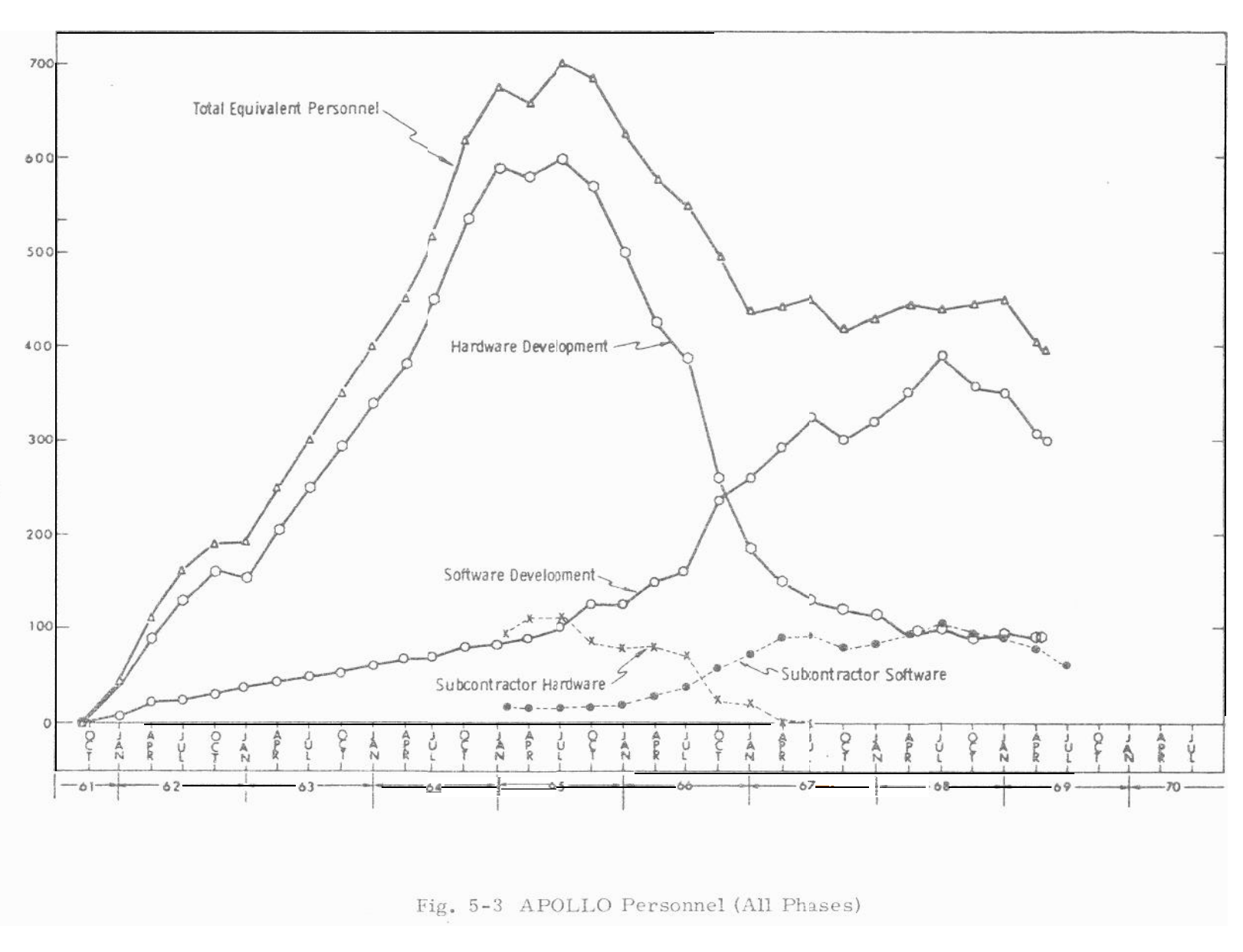

"Finding bugs" is an incredibly broad problem. It starts with how fundamentally non-deterministic software development is, a problem that's been known (if you know where to look) since at least the 1960s. When NASA and MIT developed the Apollo guidance computer, they assumed it was more of a hardware problem, but once the hardware was done they had to keep staffing up on the software side to actually get things running:

Finding problems in software is hard for roughly the same reason that predicting asset prices is hard. To find a bug, you're building a mental model of someone else's mental model of a problem, and then investigating it for flaws. But the mental model itself is the programmer's best effort at formalizing the problem.

One solution is a brute-force approach. Take every function, give it every possible input, and, for a sufficiently complex program, you can prove that it's secure some time after the heat death of the universe. The number of possible inputs grows combinatorially. For example, my keyboard has 47 keys that enter a character which can be altered by holding down shift. That's two characters per key, and adding the space bar brings it to 95 possibilities. Exploring all possible 16-character sequences means testing 95^16 (roughly 4.4 × 10^31) possibilities. If you test one trillion per second, it will only take you 1.4 trillion years to discover a magic sequence of text that used to reliably crash Chrome when entered into the address bar or clicked as a link.
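The arithmetic can be checked in a few lines. This sketch just reproduces the paragraph's numbers (95 symbols, 16 characters, one trillion tests per second); `brute_force_years` is a hypothetical helper name, and the year length is assumed to be 365.25 days.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def brute_force_years(alphabet_size: int, length: int, tests_per_second: float) -> float:
    """Years needed to try every string of `length` symbols drawn from `alphabet_size` choices."""
    return alphabet_size ** length / tests_per_second / SECONDS_PER_YEAR


# 47 character keys, each with and without Shift, plus the space bar.
alphabet = 47 * 2 + 1
years = brute_force_years(alphabet, 16, 1e12)
print(alphabet, f"{years:.2e} years")
```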

So the pure brute-force approach is hard. But actually, we've been testing things on easy mode, because we're talking about a single application that runs on a single machine. The really fun errors happen when there's a distributed system that has cascading failures: a hard drive fills up, but that's okay, because there's a backup; the relevant service writes a message to a log and waits for an acknowledgement before it starts the process of switching to another drive; the acknowledgement packet gets dropped, and the service keeps on waiting, accumulating information in memory until it runs out of memory. Now you have a memory problem on machine A caused by a dropped packet from machine B, but that dropped packet was caused by an issue on machine A.
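A toy model of that cascade, assuming a single service on machine A that buffers writes in memory while it waits for machine B to acknowledge a failover. Every class, method, and limit here is hypothetical; the point is that nothing in `Service` is individually wrong, yet one dropped acknowledgement turns a full disk into an out-of-memory crash.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Hypothetical service on machine A."""
    memory_limit: int
    buffered: list = field(default_factory=list)
    awaiting_ack: bool = False

    def on_disk_full(self, send_to_b) -> None:
        # The disk is full, but there's a backup: ask machine B to acknowledge before failing over.
        self.awaiting_ack = True
        send_to_b("switch-to-backup")

    def on_ack(self) -> None:
        # Failover proceeds, so anything held in memory can be flushed.
        self.awaiting_ack = False
        self.buffered.clear()

    def write(self, record: str) -> None:
        if self.awaiting_ack:
            # No usable disk until the ack arrives, so hold the record in memory.
            self.buffered.append(record)
            if len(self.buffered) > self.memory_limit:
                raise MemoryError("machine A ran out of memory waiting for machine B")


def run_scenario(drop_ack: bool, writes: int = 100, memory_limit: int = 50) -> str:
    a = Service(memory_limit)

    def send_to_b(msg: str) -> None:
        # Machine B replies immediately, unless the network drops the acknowledgement.
        if not drop_ack:
            a.on_ack()

    a.on_disk_full(send_to_b)
    try:
        for i in range(writes):
            a.write(f"record-{i}")
    except MemoryError as err:
        return f"crash: {err}"
    return "ok"


print(run_scenario(drop_ack=False))
print(run_scenario(drop_ack=True))
```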

There's a critical pair of insights about bugs in distributed systems in particular:

- They tend to be the result of a chain of low-probability outcomes that are very unlikely to emerge naturally, but

- A system is "distributed" if it has enough users that it can't be hosted by a single machine, i.e. it's a fairly popular service. And that means it's very likely that a system big enough to be distributed will also be used by enough people, in enough ways, that they'll inevitably encounter bugs.
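One way to see why both points hold at once: if a bug requires three independent one-in-a-thousand events in the same session, it fires about once per billion sessions, and the chance that someone, somewhere hits it is 1 - (1 - p)^n. The independence assumption and the session counts below are illustrative, not measured, and `p_at_least_one` is a hypothetical helper.

```python
import math


def p_at_least_one(p_per_session: float, sessions: float) -> float:
    """Chance that a bug with per-session probability p fires at least once in `sessions` tries.

    Computes 1 - (1 - p) ** n via log1p/expm1, which stays accurate when p is tiny.
    """
    return -math.expm1(sessions * math.log1p(-p_per_session))


# Assumption: three independent one-in-a-thousand coincidences must line up in one session.
p_chain = (1 / 1000) ** 3  # about one in a billion

for sessions in (1e3, 1e6, 1e9, 1e10):
    print(f"{sessions:.0e} sessions: {p_at_least_one(p_chain, sessions):.6f}")
```

A small deployment will essentially never see this bug, while a service with billions of sessions will see it more often than not.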

The longer it takes for this to happen, the worse the bug, along two axes. First, if it took a long time to discover, it's probably a chain of one-in-a-thousand coincidences that all occurred at once, which makes it hard to reproduce. But, even worse, the longer the time between the bug being written and it being discovered, the less context developers have for debugging it. The easiest bug to fix is the one that you just wrote: you remember what you were trying to do, you see an error on your screen, and you can immediately start incorporating that bug into your mental model of the problem and the solution to see where things broke down. In computing terms, the relevant data is conveniently cached. But some bugs surface years after the fact. If they're in code someone else wrote, you have to reconstruct their thought process after the fact and figure out how the code is supposed to work before you can figure out what broke it. Even if you wrote the code, the "you" who wrote it might as well be a stranger. It's a useful stylized fact about the human mind that we have our own garbage collector: our brains quickly wipe unused facts.

So you need another approach. The general sketch of what Antithesis is doing is that it's building a model of the entire system—the program, the hardware, simulations of external APIs—and running it in a deterministic hypervisor that's guaranteed to produce exactly the same state given the same inputs.[1]
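Antithesis does this at the hypervisor level, which is much harder than anything that fits here. As a rough, process-level analogy (not their implementation): if every source of nondeterminism in a simulated system, such as which node sends next or whether the network drops or delays a packet, is drawn from one seeded random number generator, then the same seed replays the same run, and hashing the final state gives a cheap way to check that two runs matched. `simulate` and its toy two-node network are hypothetical.

```python
import hashlib
import random


def simulate(seed: int, steps: int = 1_000) -> str:
    """Run a toy two-node system whose only nondeterminism comes from one seeded RNG.

    Returns a SHA-256 hash of the final state, so two runs can be compared cheaply.
    """
    rng = random.Random(seed)  # the single source of "randomness" for the whole run
    nodes = {"A": [], "B": []}
    in_flight = []  # messages currently on the simulated network

    for step in range(steps):
        sender, receiver = rng.sample(["A", "B"], 2)
        in_flight.append((sender, receiver, step))

        # The simulated network drops, delays, or delivers each message based on the RNG.
        still_in_flight = []
        for msg in in_flight:
            roll = rng.random()
            if roll < 0.01:
                continue  # dropped packet
            elif roll < 0.2:
                still_in_flight.append(msg)  # delayed until a later step
            else:
                nodes[msg[1]].append(msg)  # delivered to the receiver
        in_flight = still_in_flight

    state = repr((nodes, in_flight)).encode()
    return hashlib.sha256(state).hexdigest()


print(simulate(42) == simulate(42))  # same seed, same history, same hash
```

Once a seed that triggers a bug is found, the run that produced it can be replayed step for step, which is what makes a one-in-a-billion interleaving debuggable.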

And what are they doing with this system?

A smart autonomous testing product learns in a very human way: it seeks out areas of maximum uncertainty and figures out how to collapse that uncertainty. This is something that shows up across different domains: chess players study games partly to memorize specific strategies and partly to internalize the mindset that would lead to victory (or, equally importantly, to reverse-engineer the thought process that leads to defeat and figure out how to avoid it). Investors look at historically great trades and think about how they'd avoid bad calls. Musicians practice a difficult passage slowly enough to get every note right, then at full speed, then back to slowly again. In every case, careful learning means speeding past the regions of a domain where there aren't many surprises, and zooming in on the places where uncertainty lurks. And then there's a departure. A human learner will either start figuring out generalizable principles or decide to accept some limitations. A computer has a third option: applying brute force, but only in places where it pays—find the functions or states that are statistically likely to lead to bugs, and exhaustively test those until the cause of the bug is known with p~=1.
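A toy illustration of "brute force, but only where it pays", using greedy coverage-guided search (a standard fuzzing technique; nothing here is a claim about how Antithesis works). The hypothetical `target` crashes only on one 4-byte input and reports how far each input got; the search always extends the input that got furthest instead of guessing whole strings blindly.

```python
import random

MAGIC = b"BUG!"


def target(data: bytes) -> int:
    """Hypothetical program under test: crashes only on one specific 4-byte input.

    Returns how many leading bytes matched, a stand-in for code coverage.
    """
    depth = 0
    for expected, actual in zip(MAGIC, data):
        if expected != actual:
            break
        depth += 1
    if depth == len(MAGIC):
        raise RuntimeError("crash")
    return depth


def fuzz(seed: int = 0, max_iters: int = 100_000):
    """Return (crashing input, attempts used), or (None, max_iters) if nothing crashed."""
    rng = random.Random(seed)
    frontier = {0: b""}  # best-known input for each coverage level reached so far
    for attempt in range(1, max_iters + 1):
        # Spend effort where the uncertainty is: extend the input that got furthest.
        parent = frontier[max(frontier)]
        child = parent + bytes([rng.randrange(256)])
        try:
            depth = target(child)
        except RuntimeError:
            return child, attempt
        frontier.setdefault(depth, child)  # keep inputs that reach new coverage
    return None, max_iters


print(fuzz())
```

Blind guessing would need on the order of 256^4, about 4.3 billion, attempts; focusing on the frontier needs roughly 256 per byte, around a thousand in total.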

Or less! Some bugs just don't matter that much, because this approach can actually find issues so obscure that, statistically, users will almost never encounter them. This shifts the problem from a technical one to a business issue. If the application in question is a game, and it would need 10x the usage for 10x the usual life of a similar game for there to be a 1% chance of a player encountering the problem, and if the impact of that problem is that the game crashes but the player won't reach that state again, it's probably a prudent business decision to ignore it. If the product in question is software controlling a surgical robot, or software backing a database that will be used to store critical information, a zero-tolerance approach to bugs may be the right course. In fact, Antithesis' origins lie with FoundationDB, which built a similar system in-house to test its own product.
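The triage described above reduces to an expected-cost comparison. This is a minimal sketch with made-up numbers: `Bug`, `worth_fixing`, and every probability and dollar figure are hypothetical, and the zero-tolerance flag stands in for cases like the surgical robot where the comparison isn't allowed to decide.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    p_hit: float  # estimated chance any user ever reaches the failing state
    cost_per_incident: float  # damage if it happens, in dollars
    fix_cost: float  # engineering cost to find and fix it, in dollars
    zero_tolerance: bool = False  # e.g. surgical robots, critical databases


def worth_fixing(bug: Bug) -> bool:
    """Fix when expected damage exceeds the fix cost, or always under zero tolerance."""
    if bug.zero_tolerance:
        return True
    return bug.p_hit * bug.cost_per_incident > bug.fix_cost


# Hypothetical numbers for the two cases in the text.
game_crash = Bug(p_hit=0.01, cost_per_incident=1_000, fix_cost=20_000)
robot_fault = Bug(p_hit=0.0001, cost_per_incident=1_000, fix_cost=20_000, zero_tolerance=True)

print(worth_fixing(game_crash), worth_fixing(robot_fault))
```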

The economics of this start to get very interesting. First, there's the usual dynamic with any kind of developer tool: it's a complement to the time and attention of some of the most expensive workers on earth, which is always good for pricing power. It also has a subtle network effect: in the discussion above about simulating a program and tracking its state at every stage, I noted that you'd need a "simulation of external APIs." That's required for a deterministic computation; you lose determinism if the API you call sometimes returns an error because of a bug on the provider's end. But that also means that, to an Antithesis customer, their confidence in their own code is ultimately bounded by the confidence they have in third-party providers. That level can be high, but it can't be total.[2] So Antithesis' customers' customers will hear about the product, as will their customers' other suppliers. Over time, if it works, it creates islands of stability and trust in an industry that can't always offer either.
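A back-of-the-envelope version of that bound, assuming a request succeeds only if your code and every third-party dependency all work, and that their failures are independent (real outages are often correlated, which makes things worse). The reliability figures and the `end_to_end_confidence` helper are invented for illustration.

```python
import math


def end_to_end_confidence(own: float, dependencies: list[float]) -> float:
    """Probability a request succeeds when it needs your code and every dependency to work.

    Assumes independent failures, which real systems often violate.
    """
    return own * math.prod(dependencies)


# Hypothetical: near-perfect in-house code calling two external APIs.
conf = end_to_end_confidence(0.99999, [0.999, 0.9995])
print(f"{conf:.6f}")
```

Even near-perfect code of your own can't push the end-to-end number above the weakest dependency.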

A simplistic model of development is that there's some amount of time spent making code that does things, and some amount of time spent making code do what it was intended to do. But that's a limiting view, because it omits the continuous process of building and finessing a mental model. And what autonomous testing offers is a tool for carefully reasoning about what a program really does. And that can't help but affect people's thought processes and coding processes. Writing a buggy line of code sometimes produces a definite sensation, either of "I'm not sure this will work" or the even more dreaded "this works, but I'm not sure why." So it points to uncertainty about the programmer's mental model itself.[3] Having a tool that automates bug detection and highlights potential trouble areas will end up being like working alongside a senior programmer, but a senior programmer who doesn't have anything better to do than to quickly run the code you just wrote through a million simulations to see if there's anything surprising about it. It's a kind of tacit knowledge of the program itself, where you get a feel for the execution path by seeing where that path meanders in surprising ways.

This is, in a sense, a return to programming tradition. In a mid-80s interview, Bill Gates said "[A]ll the good ideas have occurred to me before I actually write the program. And if there is a bug in the thing, I feel pretty bad, because if there’s one bug, it says your mental simulation is imperfect. And once your mental simulation is imperfect, there might be thousands of bugs in the program." That's a throwback to a world where every byte is precious and every wasted instruction is intolerable, where programs are a sort of jigsaw puzzle that tries to pack the maximum conceivable functionality into very finite hardware. Even at the time that he gave the interview, Gates knew that world was fading as programmers switched to higher-level languages. Those languages made development a lot faster, but meant that developers were interacting with an abstraction that mapped to hardware, not the hardware itself. Which was a win, but which also encouraged piling on more abstractions, and creating more software that interacted with other software, until the whole system was nightmarishly complex. And we're not going back to writing everything in assembly and seeing how much we can fit into 8KB of RAM. But we can go back to the result of those constraints, a deeper knowledge of exactly what the computer does when you tell it what to do. Gates continues: "I really hate it when I watch some people program and I don’t see them thinking." Now the good ones will keep thinking, but they'll have help.

Disclosure: Antithesis works with Diff Jobs. One of the fun parts of this is explaining what the company is working on, hearing "Oh, that's trivial," then "Oh, no, that's impossible" and then, after a while "Wow, that just might work!"

"Exactly" means that if you run it on two machines and take a hash of memory at a given point, you're getting the same output. This is a nontrivial problem, but it's solving for a tradeoff: to reproduce a sufficiently tricky bug, you either need to reproduce the exact state multiple times, or you need to exhaustively cycle through potential states until you stumble on it again. Googling around for "deterministic hypervisor" shows some research projects that attempted it and papers advocating for it, but not live examples. ↩︎

There are more than a few companies that will insist on developing every mission-critical piece of software they use in-house, even if all they're doing is expensively replicating a subset of a commercially-available tool. For them, the cost of either downtime or uncertainty about it is intolerable. But this is fairly rare; even if the impulse is more common, the capacity to pull it off and the judgment to do it when it actually makes sense will be rare. ↩︎

That's different from the sensation of writing something that looks like it shouldn't work, but actually works perfectly. That feels more like coming up with a good joke. ↩︎

Elsewhere

Rebooting

Cruise, which shut down testing of its driverless cars in October and laid off a quarter of its staff in December, has hired a new chief safety officer. Org-chart news is sometimes the kind of thing that's worth covering from a business perspective for the same reason obituaries were a key part of the local newspaper model: any way to write news about someone your reader personally knows is a good opportunity to take. But org-chart changes are also a way for companies to publicly signal priorities and continuity: Cruise spends cash and (possibly) equity to bring on someone new, who signals externally that the company is taking safety seriously and signals internally that Cruise employees shouldn't be interviewing quite so aggressively at Waymo.

AI, UGC, and Communities

Fan wiki host Fandom has rolled out new AI tools that let users ask questions about fictional characters and get answers derived from fan-generated text. Fandom is one of those companies that has received a large unexpected dividend from the AI boom, because they have a large supply of training data in the form of user-contributed content, and because they have plenty of demand for informative answers via search traffic. Right now, their main struggle is to get wiki moderators and users on board with being unpaid contributors to an AI tool, but over time the bigger problem is that inline AI in search can displace their business entirely.

Land Rushes

A natural feature of economic systems is that as new money flows through (whether it's the result of some new source of market-based demand or some top-down mandate), supply expands in some parts of the economy, and in the parts where it doesn't, prices rise. Something similar happens on the demand side when there's a supply shock: some consumers curtail purchases, and those who can't do without pay up instead. The world of SaaS went through both cycles at once: when funding was easy, companies raised money to fund land-rush strategies, and the average cost to close a deal soared. When funding was cut, they did less of this, but that was offset by an even larger drop in demand, so the cost of landing a new deal actually kept going up before moving back down in the last few quarters as things normalized ($, The Information). The truly insidious thing about having your outcomes affected by, or even determined by, macro factors is that it doesn't feel like an external, temporary force pushing things in some direction; it just feels like the natural backdrop for this sort of business.

AI and Share Shift

Around this time last year, Microsoft started talking about market share. Like money and class, market share is the kind of thing where there's less and less upside in talking about it the more of it you have, but in this case they were talking about search, a category Google dominates. But it turned out that the most significant share shift was in the cloud, where analysts used to estimate that Azure was half the size of AWS, but where they now estimate that it's three quarters the size. Much of the recent share shift is in AI, where the long-term economics are unclear. On one hand, Microsoft's incentive is to keep prices low in order to win and maintain share. On the other hand, there's uncertainty about both long-term AI demand and how much the cost curve will bend at scale. For a long time, AWS was a big bet whose costs were more certain than its upside; it's only fair that Microsoft would have to make a bet of similar magnitude to keep up.

Disclosure: Long MSFT, AMZN.

Nirvana

For equity hedge funds over the last few years, the general story has been that buying is tricky but shorting is terrifying. Years after the peak of the meme-stock era, small companies with dubious economics are still prone to spiking without warning, skewing the risk/reward and forcing investors to spend time on diversified short books in order to maintain their target risk exposures. But so far this year, the short side has done quite well ($, FT), while many of the quality AI bets have also kept moving in the right direction. Hedge funds are cyclical at many different time scales, and the shortest of those is driven by gross exposure at other hedge funds: when volatility spikes, they reduce all of their positions, and this tends to mean that the most-shorted stocks go up and that stocks with high short-term momentum drop. But when markets normalize, both of those strategies work well.

Byrne Hobart
