Electrocloud!

Mark Zuckerberg once described his long-term vision for Facebook by comparing it to a utility: "Maybe electricity was cool when it first came out, but pretty quickly people stopped talking about it because it’s not the new thing." Well, Mark, some of us do have plans to keep talking about electricity.

In my post a few weeks ago, on why the power went out in Texas, I noted that there are interesting parallels between cloud computing economics and electrification. As it turns out, I'm not the only person to make the comparison; a reader sent along this video of Jeff Bezos making a similar argument in 2003:

So there's a much better analogy [than a gold rush] that allows you to be incredibly optimistic and that analogy is the electric industry. And there are a lot of similarities between the Internet and the electric industry... One of them is that they're both sort of thin, horizontal, enabling layers that go across lots of different industries. It's not a specific thing.

The electric industry is, indeed, a very pure, horizontal one. Whether you're powering lights, a data center, appliances, manufacturing equipment, a movie theater, or a Peloton, you're using the same electrons (maybe the same very busy electron!). It's close to a universal commodity.1 Bezos' talk was in early 2003, which was before the launch of the modern AWS, but just a few months after the launch of a product that had the same name and inspired it. Bezos clearly had universal commodities on the brain, and he was making the right analogy.

For example:

AWS and electricity both benefited from open platforms that enabled novel use cases. The first killer app for electricity was lighting, and for a long time most appliances screwed into light sockets.

Cloud computing and electricity both show very favorable price elasticity: cut the cost, and not only do people use it more, but they find more uses for it.2

Meanwhile, scale drives lower prices; usage quickly reaches previously inconceivable levels.

They both broadly decentralize at one level by being increasingly centralized at a different level. Fewer companies need to own datacenters, even though aggregate datacenter investment is rising; the original grid was a series of microgrids, but after a while they couldn't compete on cost with centralized access.

More trivially, but interestingly: Samuel Insull, who deserves credit for figuring out how to scale the electric utility business in something resembling its modern form, got his first big break by working as an assistant for Thomas Edison. "Assistant" in this case meant shadowing Edison 24/7, paying his bills (and delaying his creditors), fixing broken machinery, and later running one of Edison's most successful subsidiaries. Andy Jassy, incoming CEO of Amazon and the executive in charge of AWS for most of its existence, had an early stint... shadowing Jeff Bezos as his technical assistant.3

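Also somewhat trivially: for decades, Samuel Insull personally answered customer inquiries at his utility companies, well past the point at which that made economic sense. He was not alone in this practice: Jeff Bezos kept a public email address for years and forwarded customer complaints to the responsible executives, often with nothing but a "?" attached.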

It's easy to miss how transformative some technologies are, because they're so life-changing that you don't bother to ask what the more primitive version would be. And it's also easy to miss them because, the more general they are, the more second-, third-, and nth-order effects dominate. For some preindustrial advances in naval technology, for example, the big side effects were deadly pandemics. And for both electricity and cloud computing, one of the subtle but impactful changes is a shift in how companies are financed—which means a shift in which risks risk-takers are able to take.

Electrification: The Early Days

Electricity went through several stages: a novelty, a plaything for the rich, a way for town boosters to set themselves apart from neighboring villages, and eventually a ubiquitous tool. Along the way, it went through several boom-bust cycles; Thomas Edison was a perennially poor manager, and had a knack for doubling down at the worst possible times. While he ended up with a substantial fortune, he ultimately lost control of his company to better-capitalized financiers (among them his marquee residential lighting customer, J.P. Morgan).

The first grids were basically microgrids: house-, neighborhood-, factory-, or streetcar-level generators that provided local power for specific uses. They worked, but they were inefficient, and scaling meant buying more generators at the same efficiency (as well as digging up lots of sidewalks to place wires, or risking gruesome accidents with overhead wires).

Aside from poor scaling, early grids had another problem: the companies running them didn't understand their business model. Edison would essentially agree to supply all of a customer's electricity needs at a set price, and try to make money by selling them lightbulbs and other equipment. This gave Edison a perverse incentive to make lower-quality bulbs that would need to be frequently replaced. He didn't follow that incentive, because he seemed to revel in constantly improving products. As a result, electric company economics got worse over time as the technology got better.

Edison's former assistant, Samuel Insull, took control of a small utility in Chicago in 1892. Working for Edison gave Insull two kinds of training. First, he learned how electricity was generated, how it was sold, how it was used, and what could go wrong. Second, he learned about financial engineering. Edison was chronically short on cash, and it often fell to Insull to juggle late invoices to keep the operation going. Meanwhile, Edison often paid employees in shares of his various subsidiaries: a combination of stock-based comp and insider-trading-based comp, since it was perfectly legal for those employees to hold, buy, or sell based on the information they had about each company's performance.

Insull discovered a better pricing model on a trip to England: electricity could be metered at the point of consumption, so users could be charged based on how much they actually used. This had two benefits:

- The obvious one, that electric companies could actually sell electricity, rather than selling proxies for its use, and

- The ability to separate selling electricity from selling the equipment that used it; Insull would benefit from better bulbs no matter who made them, not to mention other products that might consume electricity.

The earliest appliances were a primitive instance of leveraging undocumented APIs: a socket doesn't know what's screwed into it, so early appliances simply plugged into light sockets. This was wonderful news for power companies, because appliances directly smoothed out load. Using an iron or washing machine meant not using light, so the best time to run appliances was during the day, when demand was low.
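The load-smoothing point can be made concrete with load factor, average demand divided by peak demand: the higher it is, the more fully a utility's generating capacity is used. The hourly figures below are invented for illustration, not historical data. Adding daytime appliance use raises average demand while leaving the evening lighting peak, and therefore the required generating capacity, unchanged.

```python
# Hypothetical 24-hour demand profiles in kilowatts; the numbers are illustrative only.
def load_factor(hourly_kw: list[float]) -> float:
    """Average load divided by peak load: 1.0 would mean generators never sit idle."""
    return (sum(hourly_kw) / len(hourly_kw)) / max(hourly_kw)

# Lighting-only customers: low demand most of the day, a sharp evening peak (hours 17-21).
lighting = [10.0] * 17 + [100.0] * 5 + [10.0] * 2

# The same customers running irons and washing machines during the day (hours 8-16).
appliances = [0.0] * 8 + [40.0] * 9 + [0.0] * 7
combined = [light + appliance for light, appliance in zip(lighting, appliances)]

print(f"peak: {max(lighting):.0f} kW -> {max(combined):.0f} kW")
print(f"load factor: {load_factor(lighting):.2f} -> {load_factor(combined):.2f}")
```

The peak, which is what determines how many generators a utility must buy, stays the same; only the utilization of those generators goes up.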

The availability of lighting changed many industries, and changed domestic life: it was possible to stay up later entertaining or reading; factories with expensive equipment could run night shifts to amortize its value; surgeons could peer inside bodies better; trains were equipped with powerful lights, which made them safer—and that allowed them to run faster, which was equivalent to increasing freight capacity without laying another foot of track.

These changes were big, but in many ways they were hard to measure through conventional economic statistics. If you replaced a gas lamp with a cheaper light bulb, you were reducing GDP. The light was much better, but since the product was both better and cheaper, it would be hard to apply a hedonic quality adjustment. Faster trains are still just trains, and a night shift at a factory shows up as an increase in labor inputs, not output per hour.

Industrial uses of electricity had a less obvious impact, but this source of energy was deeply transformative to manufacturers. In part, this was because it was a recursive invention: it raised the demand for copper, but electric smelting raised the supply in turn. But its biggest impact was reshaping the factory, and then changing how companies related to capital markets.

Before electrification, factory power was centralized: a single power source, usually steam or water, would turn a central shaft, and all the powered devices in the factory would connect to it through a system of belts and pulleys. This was mechanically inefficient (power is lost when it's transmitted this way) and led to a default layout where equipment was distributed radially around a single shaft. This made the optimal factory a multi-floor building; the most physically efficient approach would have been a cylinder, but they compromised with a cuboid.

This had several effects:

- There was a lot of inefficiency involved in moving materials up and down to different floors.

- The factory had many moving parts, all of which were dependent on one another. This made upkeep a kludge: dripping oil on factory components to keep them lubricated, for example, and fixing belts and pulleys as they wore out.

- All that friction, not to mention oil dribbling everywhere, made factory air filthy. (Factory owners were not especially worried about how indoor air quality would affect their workers, but clothing manufacturers did worry about selling dirty, oil-sodden clothes.)

- Factories had to keep the whole shaft-and-belt system running, and pay for the power to do it, even when only a few machines were actually in use; everything was connected to the central system.

- Factories had to be located near moving water, or in places with abundant coal. Since fuel had to be moved directly to the factory, it made sense to either locate factories in places with dense transportation networks (like cities) or where coal was mined.

- It was unsafe; a multistory building with an inferno in the basement, full of rapidly-moving pulleys, is a deathtrap.

- Replacing a power source was a large one-time investment that probably meant redesigning the entire factory. Since the factory was built around assumptions about available power, either a) any replacement of the main power source with a higher-power one had to coincide with buying additional equipment that would consume more power relative to the space it took up, or, much more likely, b) factories would be run on their old design until they were no longer profitable.

Electrification completely changed this. Now, new equipment could just use additional centrally-generated power. Instead of expanding a business by building a new factory, manufacturers could expand by adding new assembly lines to existing factories. This meant that factories could be spread-out single-story buildings, and didn't need to be quite so sturdy. And they didn't have to be nearly so choosy about location. (The internal combustion engine accelerated this, too, by giving workers more commuting options. The Second Industrial Revolution was a really fortunate time.) Adoption took a while, because of the large base of fixed investment: electric motors were barely in use in 1900, were about 20% of factory power in 1910, 50% by 1920, and 80% by 1930.

Electrifying Finance

Electrical power was more efficient, and it allowed safer factories to operate in places that they couldn't before. But the change was not just a change of degree: electrification reduced the natural increment of capital investment. Instead of discrete expansion—two factories to three—a company could expand one factory by 5%, or cut another's staff and output by a third, or otherwise make small adjustments.
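A toy model of that change, with hypothetical numbers: demand grows 8% a year from 100 units, and capacity has to stay ahead of it. Under the line-shaft regime, capacity comes in whole-factory blocks of 100 units; under electrification, it can be added 5 units at a time. The sketch reports the average share of capacity sitting idle, which is capital tied up without earning anything.

```python
import math

def capacity_path(demand: list[float], increment: float) -> list[float]:
    """Smallest capacity, in whole multiples of `increment`, that covers each year's demand."""
    return [math.ceil(d / increment) * increment for d in demand]

# Hypothetical demand: 100 units, growing 8% a year for ten years.
demand = [100 * 1.08 ** year for year in range(10)]

for label, step in [("whole factories (100 units)", 100.0), ("small additions (5 units)", 5.0)]:
    capacity = capacity_path(demand, step)
    idle_share = [(c - d) / c for c, d in zip(capacity, demand)]
    print(f"{label}: average idle share {sum(idle_share) / len(idle_share):.1%}")
```

The model ignores construction lead times and the cost of each addition; it only isolates how the size of the increment drives idle capacity.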

At the time, capital markets basically viewed a stock as a very low-quality species of bond. The usual waterfall for operating earnings was:

- Bondholders get paid first.

- If there are profits, preferred shareholders get a fixed dividend, at a higher rate than bondholders' yields. (If there are no profits, the preferred stock accumulates dividends.)

- If the preferred stock's dividend has been paid, then and only then can the company declare a dividend for common stock, distributing excess profits.
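The waterfall above can be sketched as a short function. This is a simplification with assumptions of ours: the preferred is cumulative, so a shortfall carries forward as arrears that must be cleared before common holders see anything; whatever remains after bonds and preferred is only *available* for a common dividend (the company still has to declare it); and the dollar figures are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Payout:
    bonds: float
    preferred: float
    available_for_common: float
    preferred_arrears: float  # unpaid cumulative preferred dividends carried forward

def waterfall(earnings: float, bond_interest: float, preferred_dividend: float,
              arrears: float = 0.0) -> Payout:
    """Split one year's non-negative operating earnings: bonds first, then cumulative
    preferred (including any arrears), then whatever is left for common."""
    to_bonds = min(earnings, bond_interest)  # a shortfall here would be a default; not modeled
    remaining = earnings - to_bonds
    preferred_owed = arrears + preferred_dividend
    to_preferred = min(remaining, preferred_owed)
    remaining -= to_preferred
    return Payout(to_bonds, to_preferred, remaining, preferred_owed - to_preferred)

# Hypothetical company owing $40 of bond interest and a $30 preferred dividend each year.
good_year = waterfall(earnings=120, bond_interest=40, preferred_dividend=30)
bad_year = waterfall(earnings=50, bond_interest=40, preferred_dividend=30)
recovery = waterfall(earnings=120, bond_interest=40, preferred_dividend=30,
                     arrears=bad_year.preferred_arrears)

for name, year in [("good", good_year), ("bad", bad_year), ("recovery", recovery)]:
    print(name, year)
```

In the recovery year, common holders get less than in an ordinary good year, because the preferred backlog from the bad year is paid first.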

Since growth happened in discrete increments, it usually required raising money: a company would issue stock and bonds to fund one big investment. So a company might have bonds that paid 4%, preferred stock that paid 6%, and common stock that yielded 10%, but that 10% was very uncertain. In the 19th century, people quoted wealth in terms of annual income, not the market price of securities ($, Economist). The assumption was that market prices might fluctuate, but they wouldn't rise monotonically. And, with high dividend payouts, you wouldn't expect growth.

Most companies thought about their financing that way. For example, when IBM's predecessor, the Computing-Tabulating-Recording Company, was formed in 1911, one of the arguments for merging its disparate subsidiaries together was that diversification would make it easier to pay interest on bonds. It wasn't a diversified bet on growth, but a diversified bet on stasis, and one of Thomas Watson's earliest struggles at the company was to get them to cut the dividend so he could grow the business.

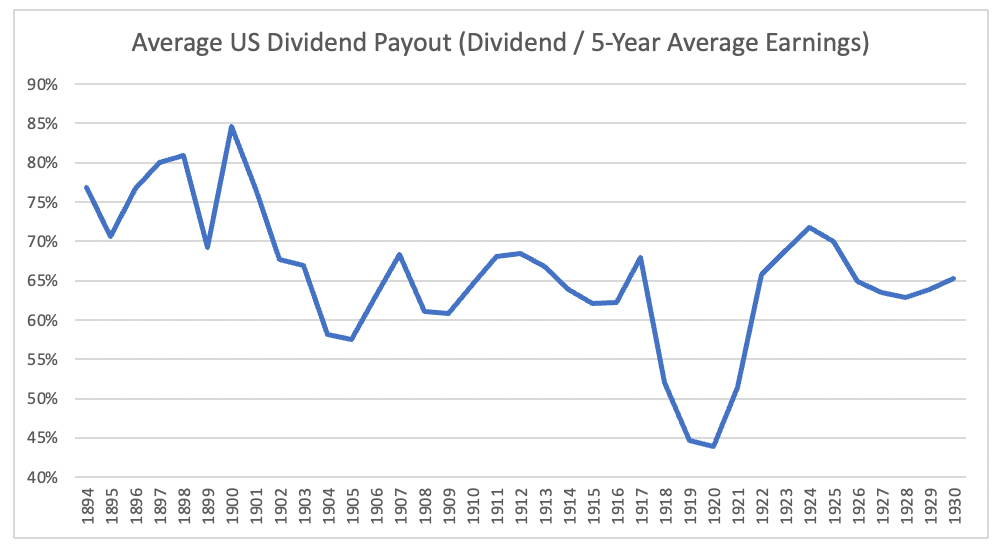

When companies can expand continuously, there's a justification for retaining earnings: instead of paying out a steady dividend, a company can pay out a growing dividend. It took a while for the theory to catch up: discounted cash flow analysis was invented by a German forester in the 19th century, and popularized (a bit) by John Burr Williams in the 1930s. So investors did not know that a steady 10% yield is worth about as much as a 5% yield that grows a reliable 5% per year. But they did observe that some companies were able to retain earnings and grow, and that there was, in principle, no limit to this growth. You can actually see the effects in average dividend payouts over time:

So we can add growth stocks to the long list of inventions Thomas Edison was possibly responsible for and would definitely have taken credit for.
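The claim that a steady 10% yield is worth about as much as a 5% yield growing 5% a year is the Gordon growth (dividend discount) model in miniature: a dividend D growing at rate g and discounted at rate r is worth D / (r - g), so the implied return is the yield plus the growth rate. The sketch below checks both cases by discounting a long, finite dividend stream and comparing the result with the closed form; the $100 price, the 10% discount rate, and the 500-year horizon are assumptions for illustration.

```python
def present_value(first_dividend: float, growth: float, discount: float, years: int) -> float:
    """Discount a dividend stream that starts next year and grows at a constant rate."""
    return sum(first_dividend * (1 + growth) ** (t - 1) / (1 + discount) ** t
               for t in range(1, years + 1))

def gordon_value(first_dividend: float, growth: float, discount: float) -> float:
    """Closed form for a perpetuity growing at `growth`; only valid when discount > growth."""
    return first_dividend / (discount - growth)

discount_rate = 0.10   # assumed required return
price = 100.0          # assumed share price

steady = present_value(price * 0.10, 0.00, discount_rate, years=500)   # 10% yield, no growth
growing = present_value(price * 0.05, 0.05, discount_rate, years=500)  # 5% yield, growing 5%/yr

print(round(steady, 2), round(growing, 2))
print(gordon_value(10.0, 0.0, 0.10), gordon_value(5.0, 0.05, 0.10))
```

Both streams are worth about $100 at a 10% discount rate; the growing one just delivers more of its value later.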

Cloud: Still the Early Days

Amazon Web Services, in classic Amazon fashion, was born by ping-ponging back and forth between high-level inspiration and ruthless, data-driven optimization. The name originally referred to something very different from the current incarnation: in 2002, Amazon tweaked the format of its affiliate links to let affiliates display links in whatever format they wanted, and use some of Amazon's data for recommendations. So instead of dropping in a pre-formatted link to a specific product, affiliates could build whatever recommendations they wanted, re-skin the entire Amazon interface, let people traverse the graph of related products, or otherwise experiment with Amazon's data. This could have been a flop (asking people to code means telling 90% of people the product is not for them), but it turned out to be a hit.

Data in hand, they launched a more powerful version a few months later, and discovered something interesting—some of the users were inside the company. A well-documented public-facing API was actually easier to use than Amazon's internal tooling.

This backstory helps put the notorious Steve Yegge memo into better context. There was a top-down mandate to run Amazon as a series of open APIs, because when they experimented with it, that was what Amazon's internal developers needed but didn't necessarily ask for. And if a company of a few thousand people, which presumably already has internal tools for the job, is willing to use one meant for external users, then the market for that tool is immense.

Amazon is much more than a financial engineering play, but it is interesting to look at it as one: the everything-is-an-API approach, Amazon's unique management practices (single-threaded leaders, PowerPoint bans, six-page memos that are silently read at meetings), and its previous use of debt indicate that the company has a very particular attitude towards financing: the cost of organizational and technical debt compounds at ever-escalating rates, while the cost of other kinds of capital just keeps getting lower. AWS is just one more way that Amazon lowers the marginal organizational cost of adding headcount, and lowers the incremental technical overhead of adding features.

A simple way to think about AWS, and infrastructure-as-a-service generally, is to take every part of the von Neumann architecture, imagine selling access to each one, and price different levels of latency at different prices. That doesn't get you all the way there, but it's a close approximation. And what's convenient about this model is that when you buy a computer one system resource at a time, you pay for exactly what you want. That means when you sell it one system resource at a time, you get a good sense of where the demand curves are, which tells you the most effective way to distribute your capex. If there's a price-sensitive customer for low-latency/low-reliability hardware, for example, that acts as a subsidy to buying the same hardware to service a latency- and reliability-sensitive customer.
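A minimal sketch of that subsidy, with every number hypothetical: suppose a server costs $1.00 an hour to own and run, reliability-sensitive customers reserve 60% of its hours, and some of the leftover hours sell as interruptible capacity at $0.30. Any revenue from spare hours reduces what the provider has to charge for reserved hours to cover the hardware. The helper `breakeven_reserved_price` is illustrative, not an AWS pricing formula.

```python
def breakeven_reserved_price(hourly_cost: float, reserved_share: float,
                             spot_price: float, spot_fill: float) -> float:
    """Price per reserved hour needed to cover the server's full hourly cost.

    reserved_share: fraction of hours bought by reliability-sensitive customers.
    spot_fill: fraction of the remaining hours sold as interruptible capacity.
    """
    spot_revenue = (1 - reserved_share) * spot_fill * spot_price
    return (hourly_cost - spot_revenue) / reserved_share

# All inputs are hypothetical.
without_spot = breakeven_reserved_price(1.00, 0.60, spot_price=0.30, spot_fill=0.0)
with_spot = breakeven_reserved_price(1.00, 0.60, spot_price=0.30, spot_fill=0.8)
print(f"${without_spot:.3f} vs ${with_spot:.3f} per reserved hour")
```

The same arithmetic works in reverse: observing how many spare hours sell at each price is one way to learn the demand curve for that tier of service.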

Take all the different mixes of general computing, specialized processors, storage at various latencies, memory, and the more or less endless long tail of AWS products, consider that every one of them has a demand curve and that Amazon sees all these demand curves, and then consider that Amazon has a better view into the cost of providing some mix of services than anyone else, and it's easy to see where AWS's economics come from.

(The other place they come from is that Amazon had a big head start in a business with economies of scale. When AWS launched, Amazon's core business was retail. All of their plausible competitors were in the software business. Until very recently, an AWS-like service would have been bad for the margins of any company that could plausibly launch it, except Amazon.)

Converting as much computation as possible into a series of marginal costs impacts economics, but the real impact is on certainty. This is an old observation. Here's a quote from an engineer:

There were many companies which introduced IAAS because we engaged to save from 20 to 60 percent of their datacenter bills; but such savings as these are not what has caused the tremendous activity in cloud computing that is today spreading all over this country... those who first introduced the cloud on this basis found that they were making other savings than those that had been promised, which might be called indirect savings.

The slightly old-fashioned diction might give it away, but this is a lightly modified quote from an engineer at the Crocker-Wheeler Electric Company, talking about why switching to electric motors was a big deal. The direct cost savings were there, but the real savings were from invisible organizational costs that simply went away.

If electrification changed factory economics from discrete to continuous, what does cloud computing do? My bet: bounded unit costs allow companies to raise money based on TAM and an estimate of long-term margins, since they reduce execution risk and make incremental economics much more certain. Early-stage but post-traction valuations have, superficially, gotten completely insane in the last few years. But there's sanity embedded in those valuations: there's less that can go wrong. For some companies, it won't make sense to rely on someone else's infrastructure for growth, but any company that can articulate a reasonable payback period can now extrapolate much further into the future than it used to. And, as I noted in the Zoom writeup yesterday, such companies can handle 30x increases in usage, off an already impressive base, without breaking down. Discrete math is powerful, but calculus explained planetary motion and got humans to the moon; turning cost structures into something easy to model has created many more metaphorical rocketships since.
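One way to see the "less can go wrong" argument, as a minimal sketch with hypothetical numbers: when the cost of serving a customer is a known, metered quantity, the payback period on acquiring that customer is a single division. When that cost is uncertain, so is everything extrapolated from it. The function name and figures are illustrative assumptions.

```python
import math

def payback_months(acquisition_cost: float, monthly_revenue: float,
                   monthly_cost_to_serve: float) -> int:
    """Whole months of contribution margin needed to recover the cost of acquiring a customer."""
    margin = monthly_revenue - monthly_cost_to_serve
    if margin <= 0:
        raise ValueError("cost to serve exceeds revenue; the customer never pays back")
    return math.ceil(acquisition_cost / margin)

# Hypothetical customer: $600 to acquire, $100/month in revenue.
# With cloud costs known to be about $25/month, payback is pinned down.
print(payback_months(600, 100, 25))  # 8

# If the cost to serve could land anywhere from $25 to $75/month, so could payback.
print([payback_months(600, 100, cost) for cost in (25, 50, 75)])  # [8, 12, 24]
```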

Further reading: I highly, highly recommend Working Backwards, a book about Amazon from two longtime executives. One of the best management books I've read, although it's unclear how many people thrive in an Amazon-style environment. Still, the book made me think it would be worth it to work there for a few years and then get brutally and ignominiously fired. The Everything Store is a good history of Amazon, although it was published in 2013, so it's more early Amazon. The author, Brad Stone, has a new book on Amazon coming out in May, which, if the company is on the ball, will also be a history of early Amazon. Age of Edison is a very informative social history of the impact of electric lighting. And The Merchant of Power is a solid biography of Samuel Insull. From Shafts to Wires: Perspectives on Electrification is a good read on how factories changed due to electrification.

I had long suspected that electricity changed the math of corporate finance; the idea shows up in this piece in 2019, for example. But I couldn't find any good numbers on long-ago dividend payout rates. I ultimately concluded that no reasonable person had the time to manually compile all that data. And that was exactly right! Fortunately, a very unreasonable person, one Alfred Cowles, did set out to produce the world's most thorough market data collection in the 1930s. Using "1,500,000 work-sheet entries" and "25,000 computer hours," he produced this masterpiece. If you've ever wanted to know how much the lead and zinc industry cut dividends in 1921, and how their stock prices responded, Cowles is your guy. Peter Bernstein once noted that "His achievements were noteworthy in his own time, and few scholars of any era have been as thorough, as creative, and as helpful to others." And they were very helpful to me.

Disclosure: I own some AMZN.

Elsewhere

IPO Demand Curves

Last year, I argued that IPO pops would be smaller if retail investors could directly participate. Institutional investors care a lot about valuation, at least valuation relative to other public companies; retail investors are much more likely to enter market orders. Sometimes, they just like the stock ($, WSJ). Robinhood seems to be working on this: in addition to letting users invest in their IPO, they're building a tool to let other companies raise money from retail investors. The usual institutional buyers may be annoyed by this, but IPO cycles don't last forever, and late in the cycle it's usually preferable for someone else to be stuck with the inventory.

Blackout Insurance

Berkshire Hathaway is a conglomerate with a large insurance operation, whose other businesses happen to include energy. The company has proposed a nice hybrid of these businesses, building extra generating capacity in Texas to provide insurance against a power outage. There is, naturally, an insurance layer to this deal, too: the proposed company "would offer a $4 billion performance guaranty provided by an investment graded counter party." Building additional gas generation capacity, especially with excess storage, would reduce the odds of another blackout (although the last one demonstrated the grid's vulnerability to cascading errors). Eyeballing the deal, it looks like a decent option: the cost would be $8.3bn (really, a fixed return on an $8.3bn investment) in exchange for avoiding a problem that a) has happened once a decade, at least recently, and b) may have cost over $129bn. It's not the only solution to grid reliability, but it is one with a well-understood cost and payoff.
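The eyeballed comparison can be written out as a rough expected-value check. The once-a-decade frequency and the $129bn cost come from the paragraph above; the 10% annual return on the $8.3bn is a hypothetical assumption, not a term of the proposal, and treating "once a decade" as a fixed annual probability is a simplification. Since the new capacity would only reduce the odds of a blackout, the sketch asks how much of the expected loss it would need to prevent to break even.

```python
investment_bn = 8.3          # proposed investment, per the deal described above
assumed_return = 0.10        # hypothetical annual return owed on that investment
outage_cost_bn = 129.0       # estimated cost of the last blackout, per the text above
outages_per_year = 1 / 10    # "once a decade, at least recently"

annual_premium = investment_bn * assumed_return
expected_annual_loss = outage_cost_bn * outages_per_year

# Share of expected losses the new capacity must prevent for the deal to break even.
break_even_prevention = annual_premium / expected_annual_loss

print(f"premium ~${annual_premium:.2f}bn/yr, expected loss ~${expected_annual_loss:.1f}bn/yr")
print(f"break-even if it prevents {break_even_prevention:.0%} of expected losses")
```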

Theaters and the Streaming Option

Disney is moving a major Marvel release onto Disney+ instead of making it an exclusively theatrical release. Part of what makes the Marvel franchise so valuable is that the movie and TV show plots are a directed acyclic graph; if you insist on getting all the inside jokes and references, you have to watch all of a movie's dependencies before you watch the movie itself. This forces Disney to time some releases in light of other planned releases. It's another reason their pivot to streaming ($) was so fantastically well-timed; it dramatically improved the company's second-best option when theatrical attendance is still low.
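If releases form a directed acyclic graph, a completist's viewing order is a topological sort of it. A minimal sketch using Python's standard-library `graphlib` (Python 3.9+); the titles and dependencies are hypothetical placeholders, not the actual Marvel slate.

```python
from graphlib import TopologicalSorter

# Hypothetical release graph: each title maps to the titles it references.
depends_on = {
    "Origin Film A": set(),
    "Origin Film B": set(),
    "Team-Up Film": {"Origin Film A", "Origin Film B"},
    "Spin-off Series": {"Origin Film B"},
    "Sequel Film": {"Team-Up Film", "Spin-off Series"},
}

# static_order() yields every title after all of its dependencies,
# and raises graphlib.CycleError if the graph turns out not to be acyclic.
watch_order = list(TopologicalSorter(depends_on).static_order())
print(watch_order)
```

Release scheduling faces the same constraint: a title can't come out before the ones it depends on, so moving one release date can force others to move too.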

Easy Comps

March 2021 will probably represent the all-time peak of misleading year-over-year charts. For example, as of yesterday US air travel is up a healthy 504% Y/Y, although it's still down 40% from 2019. But the summer is looking objectively good for US travel: airlines are adding flights to vacation destinations, hotel occupancy is tantalizingly close to 2009 levels, and vaccinations are still accelerating. There's a broad trend towards faster recoveries from specific bad events—at least as far as consumer confidence is concerned. And immense slack in the system (few planes have been scrapped, and nearly-empty hotels are not getting demolished) means that the travel business can absorb a lot of pent-up demand and revenge shopping. For the US and a few other countries, "normal" is nearly at hand, and a vacation to somewhere with lighter mask and distancing restrictions is basically a way to visit the old normal a few months early.
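The arithmetic shows how much work the denominator is doing. Taking the two figures above at face value and indexing 2019 to 100, a level that is 40% below 2019 and also 504% above a year earlier implies the year-earlier base was only about a tenth of 2019's.

```python
# Index 2019 traffic to 100; the two percentages come from the paragraph above.
level_2019 = 100.0
level_now = level_2019 * (1 - 0.40)     # still down 40% versus 2019
level_2020 = level_now / (1 + 5.04)     # up 504% versus a year earlier

print(f"implied 2020 base: {level_2020:.1f} (2019 = 100)")
```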

Permanent Shifts

Online grocery shopping was growing before Covid, briefly turned into an essential service, and has since reverted to normal growth from a higher baseline. One piece of evidence for this is the growth of cold storage warehouses ($, WSJ). The broad post-Covid trend in retail is a blurring of online and offline shopping from the consumer's perspective, and a coincident blurring of the difference between a store and a fulfillment center. That trend, too, has antecedents; a Costco is basically a warehouse with fewer forklifts and more cash registers. But now it's universal.

Science fiction authors sometimes trip over themselves by imagining energy as a futuristic unit of currency. Commodity-backed currencies are rare, and the best ones use the most useless commodities, not the most useful. And as earlier discussions of the grid indicate, it's very hard to have a Central Bank of Energy, and hard to support arbitrary withdrawals and deposits to the energy ecosystem. Databases scale better than batteries. ↩

As a broad rule: the more general the product is, the more lower prices stimulate demand. The more specific, the more you can get away with charging high prices. So brokerages win when they cut commissions to near-zero or zero; research providers win when they make the best analysts very expensive to reach. Leisure airlines have found that price cuts stimulate travel demand; they lead directly to more travel. But business travel is popular in part because it's an expensive signal, and adding a bunch of business-class seats to a randomly-selected small town will not make that town more likely to get a Fortune 500 company to headquarter there. ↩

There are two ways to conceive of an assistant job. One is serializing, by streamlining particular processes that the CEO has to do but doesn't have time to do (responding to high-urgency/low-importance emails, scheduling, setting up travel). And the other is parallelizing, trying as much as possible to clone the CEO's behavior in order to give them a productive 48-hour day instead of the usual 24. That kind of job is rarer, and can lead to future CEO roles; it seems to describe Jamie Dimon's job working for Sandy Weill. And sometimes they bleed together; when Andrew Carnegie worked as an assistant to the manager of the Pennsylvania Railroad's Western Division, his boss once got an urgent telegram while he was out of the office; Carnegie impersonated his boss to answer the telegram and resolve the crisis, and got promoted rather than fired. ↩

Byrne Hobart
